
Learning about SEO is a bit of a challenge, isn't it? For one thing, there is no single body of knowledge, so the information has to be collected bit by bit from many different places. For another, that information is often misinterpreted, giving rise to fake ranking factors and far-fetched theories. That's why, to learn the truth about SEO, it's best to go straight to the source: Google itself.

In the past, I have discussed a few sources of SEO information from Google, namely the SEO Starter Guide and the Search Quality Rater Guidelines. Today, we will go even deeper and explore Google's search patents: documents that explain various aspects of how Google evaluates and ranks search results. Read on to learn what these patents are, why they are worth studying, and which ones can help you design a better SEO strategy.

When Google develops a new way to improve search, it files a patent application with the United States Patent and Trademark Office (USPTO). Patents are technical documents that describe individual pieces of the search technology in detail. Their role is to give Google an edge over competing search engines by protecting its innovative approaches to search from being copied.

It is important to mention that patented technologies are not necessarily a part of the search algorithm. There may be some delay between the patent being filed and the technology being implemented. It is also possible that the technology is never implemented, or that the patent goes through multiple iterations before reaching its final state. Filed patents are basically a collection of ideas that Google wants to protect, but may or may not actually use.

That said, patents offer a unique insight into the way the algorithm may work; in many ways, they are the truest form of SEO knowledge. Studying patents can help you anticipate upcoming algorithm updates and identify new and existing ranking signals. You can use this knowledge to future-proof your website as well as to verify your current SEO strategy.

Patent applications and granted patents can be searched on the USPTO's official website: just enter Google as the applicant or assignee and look through the patent titles. The problem is, Google has filed thousands of patents, and most of them are not related to SEO. Furthermore, patents are highly technical documents, and understanding them takes some getting used to. So, searching the USPTO database directly might not be the most efficient approach for a casual reader.

A better approach is to follow patent enthusiasts: SEOs who monitor patent updates and have been recognized by the community as patent experts. They do the job of sorting through hundreds of patents each year to select the few that really matter for SEO. While there have been a few patent experts over the years, the person with the longest history of writing about Google's search patents is Bill Slawski, who highlights the most important updates on his blog, SEO by the Sea.

In this section, I will list some of the patents that describe novel or controversial optimization ideas and have practical implications for SEO managers. I will skip patents that describe well-known ranking factors, as well as patents on topics over which SEO managers have little to no control.

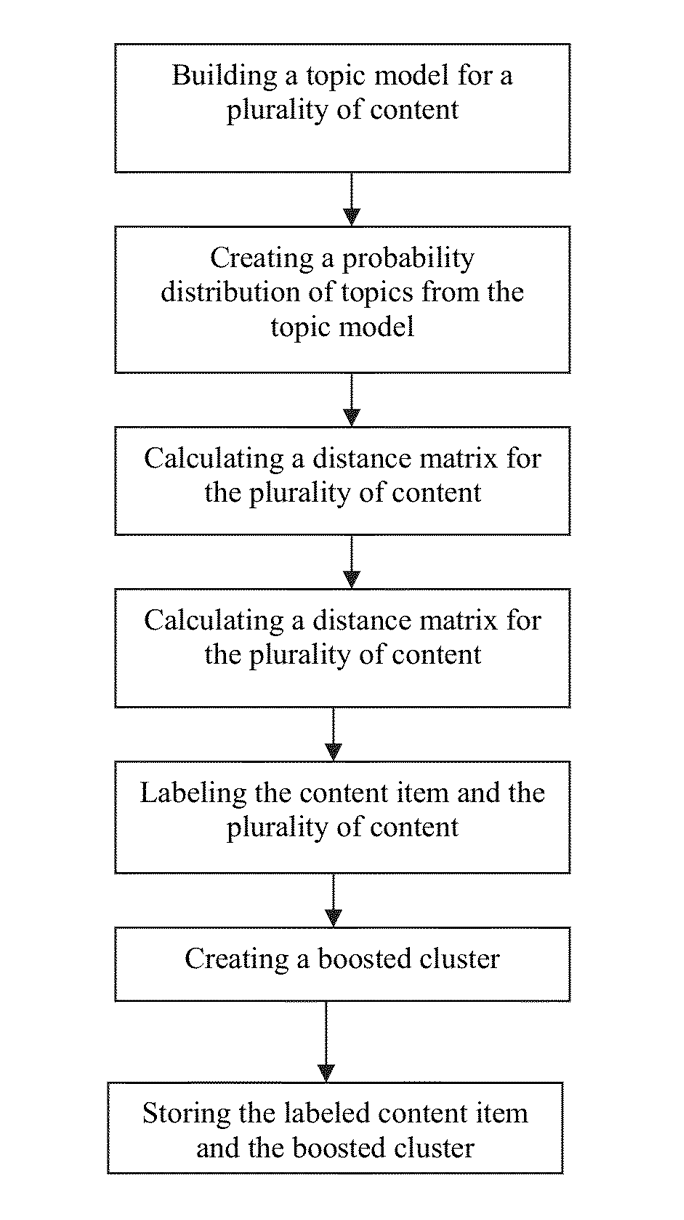

The patent describes grouping websites and pages by topic and creating something that can be described as expert clusters. Content from these clusters is then given priority when serving search results for a related query.

Interestingly, content that does not belong to a cluster may be skipped by the search engine entirely, without any evaluation, regardless of any other quality signals it may have.
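To make the cluster-first idea concrete, here is a toy Python sketch. It assumes every page already carries a topic label and a single combined quality score; the `Page` class and `rank_within_cluster` function are hypothetical illustrations, not code or terminology from the patent.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    topic: str      # hypothetical: topic label assigned by some upstream classifier
    quality: float  # hypothetical: combined score from all other quality signals


def rank_within_cluster(pages: list[Page], query_topic: str) -> list[str]:
    """Rank only the pages in the cluster that matches the query's topic.

    Pages outside the cluster are filtered out before scoring, so their
    quality score is never looked at, however high it is.
    """
    cluster = [p for p in pages if p.topic == query_topic]
    cluster.sort(key=lambda p: p.quality, reverse=True)
    return [p.url for p in cluster]


pages = [
    Page("a.com/jackets", "outdoor", 0.6),
    Page("b.com/jackets", "finance", 0.95),  # best score, but in the wrong cluster
    Page("c.com/hiking", "outdoor", 0.8),
]
print(rank_within_cluster(pages, "outdoor"))  # ['c.com/hiking', 'a.com/jackets']
```

Note that `b.com/jackets` never gets ranked for the outdoor query despite having the highest score, which is the behavior the patent describes.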

The obvious implication is that it is beneficial for SEO to build your website's content within a certain field and not to stray far from your main area of expertise. In practical terms, it would mean creating tiered content plans and arranging smaller pages around much bigger pillar pages.

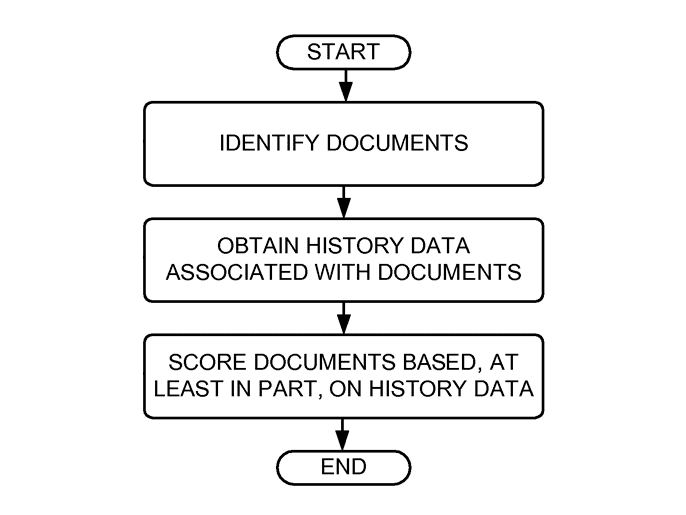

The patent discusses ranking a page, at least in part, based on how old it is. The age of the page can be determined using a variety of methods, but most commonly via the date it was first crawled.

The patent goes on to say that the age of the page may also be used to calculate the average link rate, i.e., the total number of backlinks to a page divided by its age. The average link rate is then also used as a partial ranking factor.

While ranking a page based on its age is nothing new, the average link rate is a concept you don't hear about very often. What it means is that the older a page gets, the less each individual backlink counts toward its link rate. So, if you want your page to keep ranking, you have to keep earning new backlinks as it ages. One way to achieve this is to create evergreen content, update it frequently, and recirculate it through your marketing channels.
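The arithmetic behind the average link rate is simple enough to sketch. This Python example assumes age is measured in whole days from the first-crawl date; the function names and the numbers are illustrative, not taken from the patent.

```python
from datetime import date


def page_age_days(first_crawled: date, today: date) -> int:
    """Age of a page in days, counted from the date it was first crawled."""
    return (today - first_crawled).days


def average_link_rate(backlinks: int, age_days: int) -> float:
    """Total backlinks divided by page age: backlinks earned per day, on average."""
    if age_days <= 0:
        raise ValueError("page age must be a positive number of days")
    return backlinks / age_days


# The same 120 backlinks, measured at two different page ages.
young = average_link_rate(120, page_age_days(date(2024, 1, 1), date(2024, 3, 1)))  # 60 days
old = average_link_rate(120, 240)
print(young, old)  # 2.0 0.5
```

At 240 days, the page would need 480 backlinks to get back to the 2.0 rate it had at 60 days, which is why an ageing page has to keep attracting new links.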

Use SEO SpyGlass to keep tabs on your backlink profile, view all links to any given page, and borrow backlink ideas from your competitors.

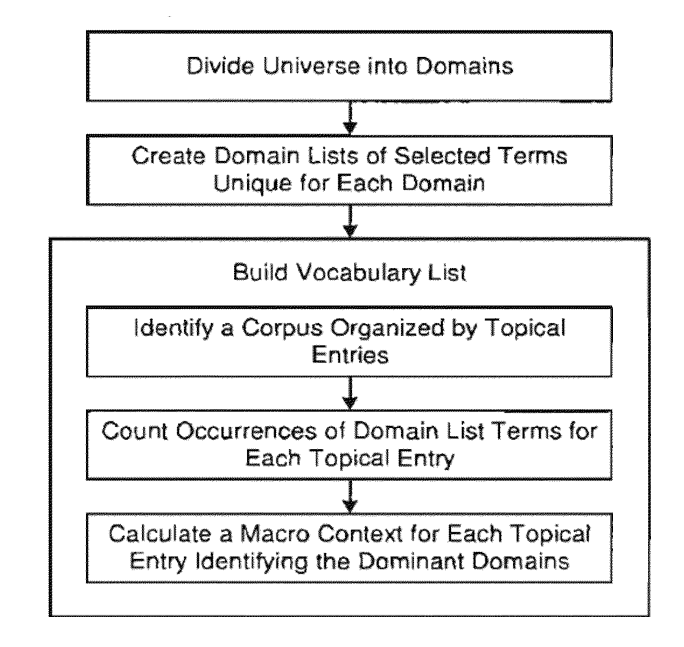

Over the years, Google has filed a series of patents related to keywords, moving its ranking criteria from keywords to keyword phrases to context words. The latest of these patents describes building topical vocabularies that go beyond keywords and include context words as well, i.e., words loosely associated with a topic.

As it stands now, Google may prefer pages that contain context words as well as traditional keywords. For example, if you are creating a page about the best down jackets, Google might expect to see some of the less obvious terms, like water, hiking, and goose.
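One rough way to picture a topical vocabulary is to measure how much of it a page covers. This Python sketch assumes the vocabulary is already known; the `context_coverage` function and the word list are hypothetical, and real systems would also handle plurals and synonyms.

```python
import re


def context_coverage(text: str, vocabulary: set[str]) -> float:
    """Fraction of a topic vocabulary that appears in the text.

    Words are matched exactly after lowercasing; there is no stemming,
    so "jackets" does not match "jacket".
    """
    if not vocabulary:
        return 0.0
    words = set(re.findall(r"[a-z]+", text.lower()))
    return len(words & vocabulary) / len(vocabulary)


# Hypothetical vocabulary for "best down jackets": keywords plus context words.
vocab = {"down", "jacket", "water", "hiking", "goose", "warmth"}
page = "Our favourite down jacket for hiking is filled with goose down."
print(round(context_coverage(page, vocab), 2))  # 0.67
```

The page covers four of the six terms; adding a sentence about water resistance or warmth would raise its coverage.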

Website Auditor now has a content editor feature that helps you write semantically rich copy. It analyzes top-ranking pages and gives you a list of keywords, as well as context words, that have proven to work for your competitors.

From a patent on video watch times to a patent on website duration performance, it looks like Google might consider visit duration to be a ranking factor. The patents describe benchmarking visit durations for a particular type of content, and then ranking the page based on how well it performs against the benchmark.
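Benchmarking can be sketched as comparing a page's average visit duration with a typical value for its content type. This Python example uses the median as the benchmark, which is an assumption (the patents are not quoted here on how the benchmark is computed), and the durations are made up.

```python
from statistics import median


def duration_score(page_seconds: float, benchmark_durations: list[float]) -> float:
    """Compare a page's average visit duration with a benchmark for its content type.

    The benchmark is the median duration across pages of the same type (an
    illustrative choice). A score above 1.0 means visitors stay longer than
    is typical; below 1.0 means they leave sooner.
    """
    benchmark = median(benchmark_durations)
    if benchmark <= 0:
        raise ValueError("benchmark duration must be positive")
    return page_seconds / benchmark


# Illustrative average visit durations (seconds) for recipe pages.
recipe_pages = [60, 90, 120, 150, 300]
print(duration_score(180, recipe_pages))  # 1.5
```

Comparing against pages of the same type matters: a 60-second visit may be poor for a long recipe but perfectly normal for a weather page.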

Finding ways to keep your visitors engaged may be favorable for your rankings. An obvious way to achieve this is to create content that is of high quality, comprehensive, and includes a variety of media and interactive elements (images, videos, polls, comment prompts, etc.).

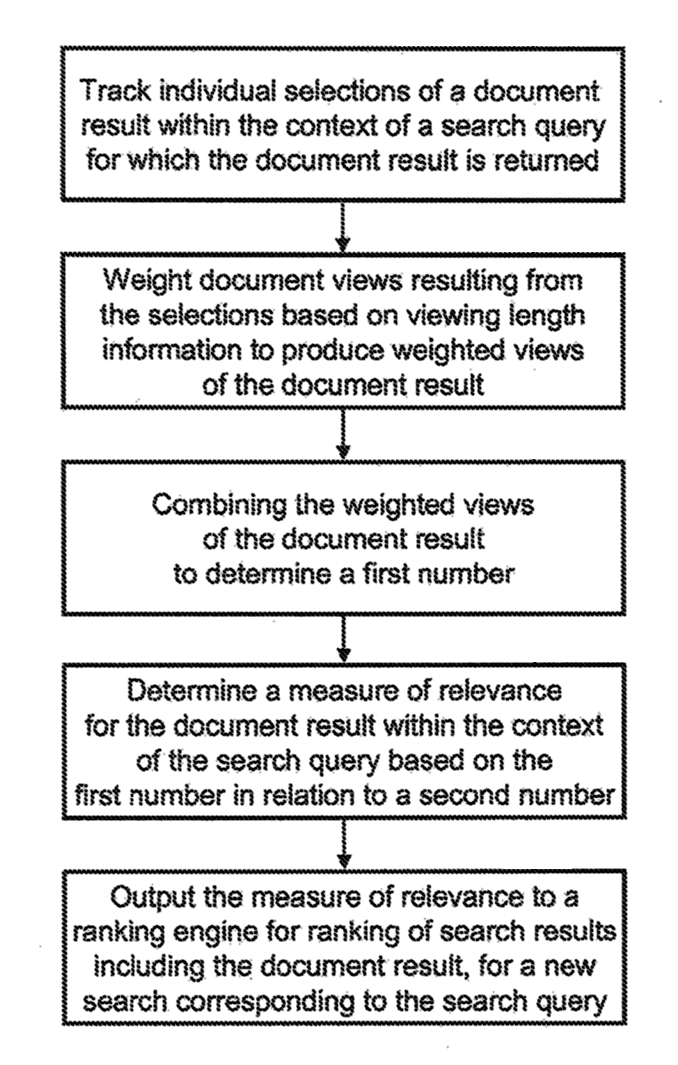

The original version of this patent used a simple click-through rate as part of ranking search results, but it has recently been updated to use a weighted click-through rate. The newer version tries to find a middle ground between clicks and visit durations, which sounds a lot like a variation of bounce rate. Basically, the more clicks your snippet gets and the longer users stay on your page, the better.
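Here is one hypothetical way a click-through rate could be weighted by visit duration. The linear weighting and the 30-second cut-off are assumptions made purely for illustration; the patent's actual weighting is not reproduced here.

```python
def weighted_ctr(impressions: int, dwell_seconds: list[float], full_credit_after: float = 30.0) -> float:
    """Click-through rate in which each click is weighted by visit duration.

    A click followed by a visit of at least `full_credit_after` seconds counts
    as one full click; shorter visits count proportionally less. Both the
    linear weighting and the 30-second default are illustrative assumptions.
    """
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    credited = sum(min(t / full_credit_after, 1.0) for t in dwell_seconds)
    return credited / impressions


# 100 impressions, 4 clicks with different visit durations (illustrative).
visits = [5, 15, 30, 120]
print(len(visits) / 100)  # plain CTR: 0.04
print(round(weighted_ctr(100, visits), 4))  # 0.0267
```

Under a scheme like this, a snippet that wins clicks but sends users straight back scores lower than its raw CTR suggests.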

There is an ongoing debate about whether Google uses behavioral metrics to rank pages. Whatever the actual case, the technology is patented, so the opportunity is there. That means you should take extra care to make your snippets irresistible in search results, from titles and meta descriptions to enhancing your snippets with structured data.

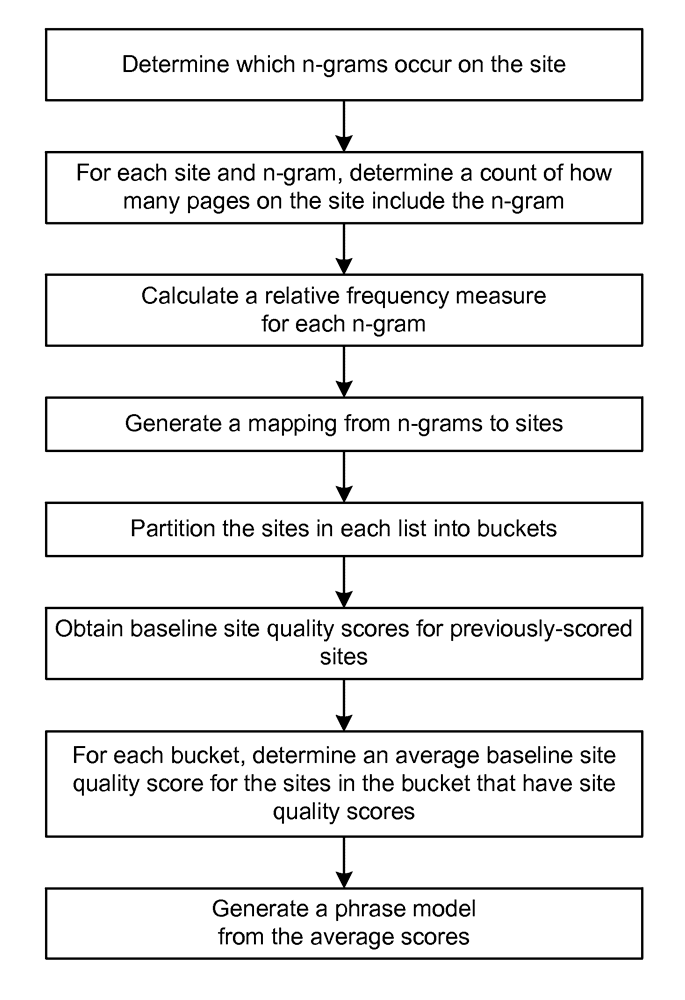

Google has filed a number of patents that use n-grams (sequences of words) to evaluate the quality of the copy. The algorithm uses a set of pages of known quality to build a language model. It then applies the model to a new page to establish how similar its writing is to the quality benchmark and ranks the page accordingly.
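The language-model idea can be illustrated with a tiny bigram (two-word n-gram) model in Python. This is a generic textbook technique with add-one smoothing, not the model described in the patents, and the training sentences stand in for "pages of known quality".

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def train_bigram_model(texts: list[str]) -> tuple[Counter, Counter, set[str]]:
    """Count word pairs (bigrams) in texts of known quality."""
    bigrams: Counter = Counter()
    contexts: Counter = Counter()  # how often each word starts a bigram
    vocab: set[str] = set()
    for text in texts:
        tokens = ["<s>"] + tokenize(text)  # <s> marks the start of a text
        vocab.update(tokens)
        pairs = list(zip(tokens, tokens[1:]))
        bigrams.update(pairs)
        contexts.update(prev for prev, _ in pairs)
    return bigrams, contexts, vocab


def avg_log_prob(text: str, model: tuple[Counter, Counter, set[str]]) -> float:
    """Average log-probability per word pair; higher means closer to the training texts."""
    bigrams, contexts, vocab = model
    v = len(vocab) + 1  # +1 leaves room for words never seen in training
    tokens = ["<s>"] + tokenize(text)
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return float("-inf")
    # Add-one (Laplace) smoothing so unseen pairs get a small, non-zero probability.
    total = sum(math.log((bigrams[p] + 1) / (contexts[p[0]] + v)) for p in pairs)
    return total / len(pairs)


quality_pages = [
    "the jacket keeps you warm in cold weather",
    "a warm jacket is good for hiking in cold weather",
]
model = train_bigram_model(quality_pages)
good = "a warm jacket keeps you warm"
gibberish = "weather cold jacket jacket jacket buy cheap"
print(avg_log_prob(good, model) > avg_log_prob(gibberish, model))  # True
```

The keyword-stuffed string uses familiar words in unfamiliar orders, so it scores lower than the natural sentence even though it shares most of its vocabulary.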

N-grams can be used to identify gibberish content, keyword stuffing, and low-quality writing. That means you should probably stay away from scraped, autogenerated content, and instead hire experienced writers or, at the very least, use proofreaders to polish your copy.

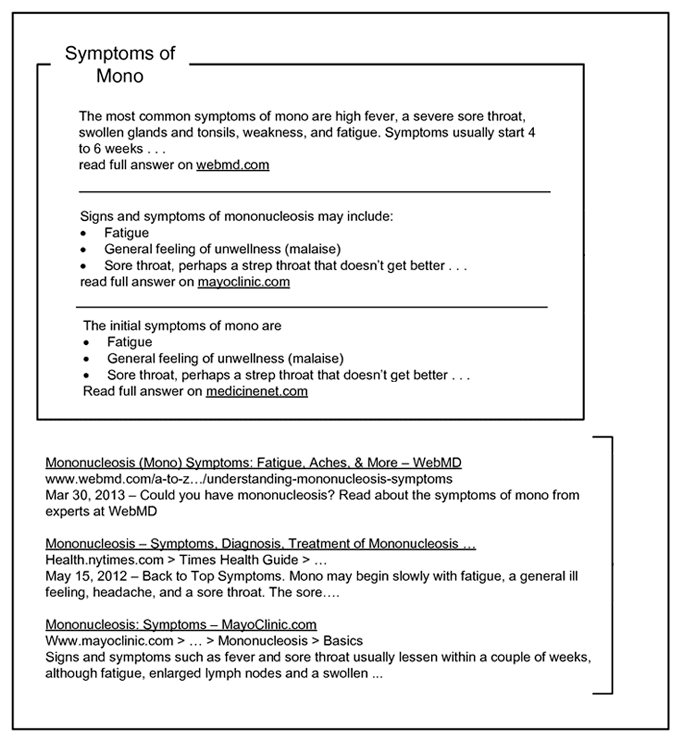

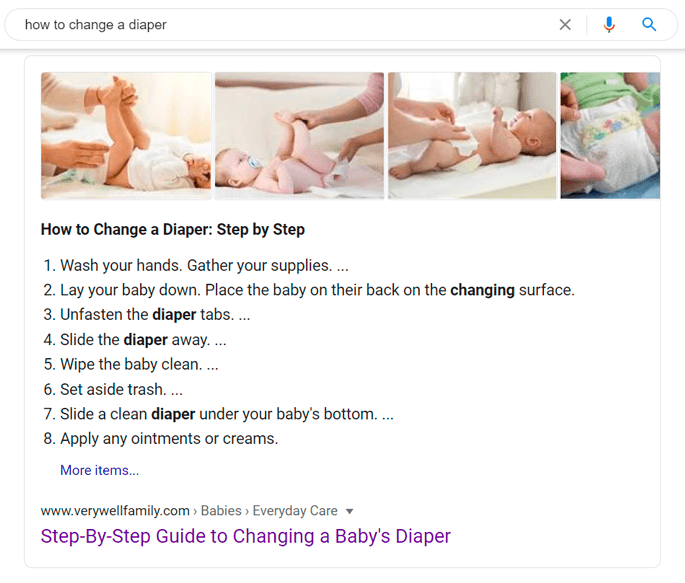

The patent describes a mechanism for determining featured snippet eligibility. Basically, whenever there is a natural language query with a very clear intent, e.g. what are the seven deadly sins, Google scans the top-ranking pages and looks for a heading that closely matches the query, followed by a concise answer, e.g. a list of the seven sins.

So, how do you get a featured snippet? Remember that each heading (H2-H6) in your copy can potentially be used in a featured snippet. Write each heading as if it were a query (natural language search + keywords), and make sure the text immediately after the heading answers that query. A perfect example of featured snippet material is a page where one of the headings is an exact match for the query how to change a diaper, followed by a numbered list of steps.
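A simplified version of the heading-matching step might look like this in Python. Word-overlap (Jaccard) similarity and the 0.5 threshold are stand-ins chosen for illustration; the patent's own similarity measure is not reproduced here, and `snippet_candidate` is a hypothetical name.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two texts have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def snippet_candidate(query: str, sections: list[tuple[str, str]], threshold: float = 0.5) -> Optional[str]:
    """Return the text under the heading most similar to the query, if it is similar enough."""
    q = tokens(query)
    best_score, best_answer = 0.0, None
    for heading, answer in sections:
        score = jaccard(q, tokens(heading))
        if score > best_score:
            best_score, best_answer = score, answer
    return best_answer if best_score >= threshold else None


# (heading, text that immediately follows it) pairs from one hypothetical page.
sections = [
    ("What is diaper rash?", "Diaper rash is a common skin irritation."),
    ("How to change a diaper", "1. Lay the baby on a clean surface. 2. Remove the dirty diaper. 3. Fasten a clean one."),
    ("Best diaper brands", "Here are our top picks."),
]
print(snippet_candidate("how to change a diaper", sections))  # prints the numbered steps
```

A heading phrased exactly like the query scores 1.0, while a loosely related heading falls below the threshold and yields no candidate.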

This is one of the more mind-numbing patents to read, but it essentially boils down to how informative your anchor texts are. The patent describes looking at various user behavior indicators of how likely a user is to click on a link. The higher the likelihood, the more link juice the link passes.
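As a rough illustration, a page's link value could be split among its links in proportion to how likely each one is to be clicked. In this Python sketch the click likelihoods are simply given as numbers; in the patent they would be derived from user behavior and link features, and `distribute_link_value` is a hypothetical name.

```python
def distribute_link_value(page_value: float, click_likelihood: dict[str, float]) -> dict[str, float]:
    """Split a page's link value across its outgoing links in proportion to
    each link's estimated probability of being clicked."""
    total = sum(click_likelihood.values())
    if total == 0:
        return {url: 0.0 for url in click_likelihood}
    return {url: page_value * p / total for url, p in click_likelihood.items()}


# Hypothetical click likelihoods for three links on the same page.
links = {
    "/down-jacket-guide": 0.6,  # descriptive anchor inside the main content
    "/click-here": 0.3,         # vague "click here" anchor
    "/footer-terms": 0.1,       # footer link
}
shares = distribute_link_value(10.0, links)
print({url: round(v, 2) for url, v in shares.items()})
```

The descriptive in-content anchor receives the largest share, which is the practical argument for informative anchors.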

Follow best practices when creating anchor texts for your backlinks, as well as for internal links. Make sure the anchor is representative of the page it leads to, includes keywords, and is surrounded by context words.

Use Website Auditor to pull a list of anchors used on your own website, and use SEO SpyGlass to pull a list of anchors used to link to your site from other sites.

Another link-related patent gives us insight into the value of individual backlinks. The patent describes a method for measuring a link's value by how much traffic it brings. If a link is not getting clicked by actual users, it doesn't pass any value to the linked page.
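The traffic-based idea can be sketched by scaling a link's value by the share of the page's visitors who actually click it. The formula and the function name `traffic_based_link_value` are illustrative assumptions, not the patent's method.

```python
def traffic_based_link_value(clicks_on_link: int, page_visits: int, page_value: float) -> float:
    """Value one link passes, scaled by the share of the page's visitors who click it.

    A link that is never clicked, or that sits on a page nobody visits,
    passes no value at all.
    """
    if page_visits <= 0:
        return 0.0
    return page_value * clicks_on_link / page_visits


print(traffic_based_link_value(50, 1000, 1.0))  # 0.05
print(traffic_based_link_value(0, 1000, 1.0))   # 0.0: an unclicked link
print(traffic_based_link_value(10, 0, 1.0))     # 0.0: a page with no visitors
```

Both zero cases correspond to the advice that follows: unclicked links and links on sites nobody visits are worth little.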

When you are building backlinks, especially through guest posts, you are probably tempted to include as many links per post as possible. Well, according to this patent, you will be wasting your time because the links that are not getting clicked on are pretty much useless. So, you might as well include fewer links and increase the chances of each of them being clicked. Also, buying links from websites that no one visits is probably useless.

The patent describes rating local results based on weighted reviews from local experts. Experts are identified using thresholds for the total number of reviews, the number of local reviews, and the number of reviews for a certain category of businesses. Google Maps does label some reviewers as Local Guides, so it looks like this patent has been implemented at least partially.
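The three thresholds translate naturally into a weighted average. In this Python sketch, the threshold values, the requirement that all three be met, and the 3x expert weight are hypothetical choices; only the three kinds of review counts come from the patent description.

```python
from dataclasses import dataclass


@dataclass
class Reviewer:
    total_reviews: int
    local_reviews: int     # reviews of businesses in the same area
    category_reviews: int  # reviews of businesses in the same category


def is_local_expert(r: Reviewer, min_total: int = 50, min_local: int = 20, min_category: int = 5) -> bool:
    """A reviewer counts as a local expert only if all three (hypothetical) thresholds are met."""
    return (r.total_reviews >= min_total
            and r.local_reviews >= min_local
            and r.category_reviews >= min_category)


def weighted_rating(reviews: list[tuple[Reviewer, int]], expert_weight: float = 3.0) -> float:
    """Average star rating in which local experts' reviews count more."""
    weighted_sum = total_weight = 0.0
    for reviewer, stars in reviews:
        w = expert_weight if is_local_expert(reviewer) else 1.0
        weighted_sum += w * stars
        total_weight += w
    return weighted_sum / total_weight if total_weight else 0.0


expert = Reviewer(total_reviews=120, local_reviews=60, category_reviews=12)
casual = Reviewer(total_reviews=3, local_reviews=1, category_reviews=1)
reviews = [(expert, 2), (casual, 5), (casual, 5)]
print(round(weighted_rating(reviews), 2))  # 3.2 (a plain average would be 4.0)
```

A single unhappy expert pulls the rating down noticeably, which is why a bad review from a Local Guide deserves extra attention.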

While there is no way for you to solicit GMB reviews specifically from Local Guides, there are ways to encourage more of your customers to review your business. You can ask them in person when conducting business, send them a follow-up email, offer loyalty program rewards, or use social media to ask your clients to leave feedback on GMB. And if you see a Local Guide giving you a bad review, you'll want to make an extra effort to make them happy.

I'm a little out of my depth writing about Google's search patents, but I do find them fascinating. Some of them are even a little unnerving, like the ones that suggest using the phone's camera to see how users react to search results, or the ones that suggest listening to background noise (TV, conversations, etc.) to collect query context. Still, they offer valuable insights into the problems that Google faces and the solutions it tries to work out. And, consequently, these insights help us deliver better content.
